As we saw in part 1 of this series, MySQL is pretty robust in the face of hard resets as long as you use InnoDB as the underlying engine rather than MyISAM. Heck, InnoDB even held together with the cache-setting “Writeback (unsafe)” in Proxmox chosen for the underlying disk. But an ib_logfile getting corrupted or going missing somehow leaves us in a pickle. Some percentage of tables are likely to be impossible to export if that happens. So we better set things up so that we have something to fall back on if that happens.

Let’s start with my favorite, Btrfs snapshots. Some background:Ext4 is pretty much the default file system for Linux today and it’s pretty darned good. I remember back in the day when we only had Ext2 and it was a big deal when Ext3 introduced journals that made recovery from crashes much faster. Now we’re at Ext4. Btrfs on the other hand is a completely new file system heavily inspired by ZFS. It’s Copy-on-Write amongst other things which is good for snapshots. So Ext4 is tried and tested and Btrfs feature-rich.

Let’s switch from Ext4 to Btrfs for a MySQL-deployment. Note that I’m completely wiping the data stored in MySQL here! This is a test-rig.

I’ll make cron run a script that makes snapshots of the MySQL data directory every five minutes. It could be done in a simpler way but my script cleans up old snapshots automatically based on an assigned lifespan which is handy. This is what we end up with after slightly less than an hour of running the standard workload-script and also taking snapshots every five minutes:

root@mysqlrecov1:/var/lib/mysql# ls -lh Snapshots/data

total 0

drwxr-xr-x 1 mysql mysql 388 Mar 21 22:54 data@auto-2020-03-21-2340_12h

drwxr-xr-x 1 mysql mysql 388 Mar 21 22:54 data@auto-2020-03-21-2345_12h

drwxr-xr-x 1 mysql mysql 388 Mar 21 22:54 data@auto-2020-03-21-2350_12h

drwxr-xr-x 1 mysql mysql 388 Mar 21 22:54 data@auto-2020-03-21-2355_12h

drwxr-xr-x 1 mysql mysql 388 Mar 21 22:54 data@auto-2020-03-22-0000_12h

drwxr-xr-x 1 mysql mysql 388 Mar 21 22:54 data@auto-2020-03-22-0005_12h

drwxr-xr-x 1 mysql mysql 388 Mar 21 22:54 data@auto-2020-03-22-0010_12h

drwxr-xr-x 1 mysql mysql 388 Mar 21 22:54 data@auto-2020-03-22-0015_12h

drwxr-xr-x 1 mysql mysql 388 Mar 21 22:54 data@auto-2020-03-22-0020_12h

drwxr-xr-x 1 mysql mysql 388 Mar 21 22:54 data@auto-2020-03-22-0025_12h

So let’s say something bad happened after the last snapshot was taken and we want to get data out of it. Can we just start a MySQL-instance with the snapshot directory as the datadir? No.

I make all my snapshots read-only because they’re meant to be backups. The more immutable they are the better. MySQL can’t run against a read only copy. But all we need to do is to make a writable copy of the read-only snapshot we’re interested in.

I guess I could have run mysqlcheck to demonstrate that things were okey but they were just fine. We could have taken whichever of these snapshots we wanted and gone back to that point in time. As much as I like this setup I do recognize some weaknesses.

First, backups are necessarily part of the same filesystem as the original. So file system corruption can leave us completely boned. There is a mechanism for sending snapshots from one Btrfs file system to another – even on another server – which can remedy that situation to some extent. But Btrfs snapshots are not great to write to tape however you twist and turn things.

Second, we’re not actually saving snapshots that MySQL gives guarantees for. MySQL has to do a sort of crash recovery process when starting up these snapshots because they look just like what you would get if the server had crashed unexpectedly and left lots of databases open.

One way to remedy this is to use a backuptool like xtrabackup. There’s a version for MariaDB called mariabackup that’s basically the same. I’ll use xtrabackup in this case. We’ll make three snapshots, consisting of one full backup and two incremental backups. Then we’ll do a restore by applying each incremental backup to the full backup and then moving the result into the place MySQL expects data to be.

This has the benefit of saving cleaner snapshots and you get them in distinct files that are easier to save to tape or backup to some external storage provider.

Now let’s try something a bit more robust, replication! I have that set up for my own databases but with an older method of keeping the various copies in sync. I’d like to try out GTID. I wonder if it’s enabled by default on this version of MySQL?

mysql> SHOW GLOBAL VARIABLES LIKE '%gtid%';

+----------------------------------+-------+

| Variable_name | Value |

+----------------------------------+-------+

| binlog_gtid_simple_recovery | ON |

| enforce_gtid_consistency | OFF |

| gtid_executed | |

| gtid_executed_compression_period | 1000 |

| gtid_mode | OFF |

| gtid_owned | |

| gtid_purged | |

| session_track_gtids | OFF |

+----------------------------------+-------+

8 rows in set (0.00 sec)

Nope. Okey, I wonder if I can add it after the fact? Well activating the feature after the fact can obviously be done, but will it work? Will slaves only see the modifications that happened after GTID was activated? We can test it. Let’s enable GTID and create a replication user.

Now for a tricky move. It’s not strictly necessary since we know writes aren’t going on but for the sake of doing things by the book, we flush all table and lock them in one terminal window and then dump all databases in another. As long as the “FLUSH TABLES WITH READ LOCK;”-session is open in MySQL the lock is held and the dump is consistent.

Then we copy the dump to mysqlrecov2 where we import it into a blank setup. Then we configure that server for replication with it’s own server-id. Then we set up the replication and run a test to see if it works. We should be able to run our testscript and find that the maximum id in the Purchase-table(for instance) is the same for mysqlrecov1 and mysqlrecov2.

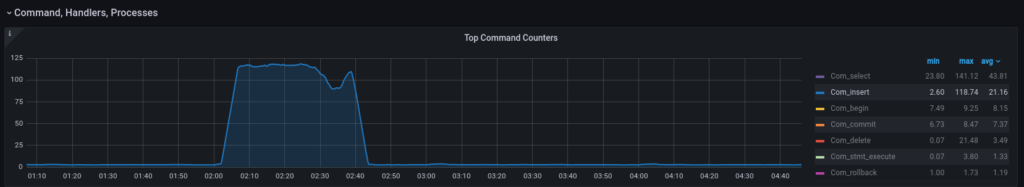

That seems to work fine. Let’s level this up one more notch. I’ll schedule snapshots to be made of the /var/lib/mysql/data directory every five minutes just like on mysqlrecov1 before. This will be a variant of what we did before. So the testscript is running against mysqlrecov1 for a while and mysqlrecov2 is replicating all the changes in real time. Snapshots are being stored in /var/lib/mysql/Snapshots/data/ on mysqlrecov2. There are actually good reasons for not storing snapshots on mysqlrecov1 which we can get to later. For now, let’s just note that the relay-log from mysqlrecov1 is ticking up nicely on mysqlrecov2:

Now let’s simulate a bonehead mistake. Something like deleting all customers in the database with an id below 5000? That should leave people quite sad. As we saw before we can restore from a Btrfs snapshot but we can lose up to 5 minutes of transactions. In fact, if we don’t recognize our bonehead mistake immediately and take MySQL down on mysqlrecov1 we could rack up even more transactions that will be lost when we go back to our earlier state.

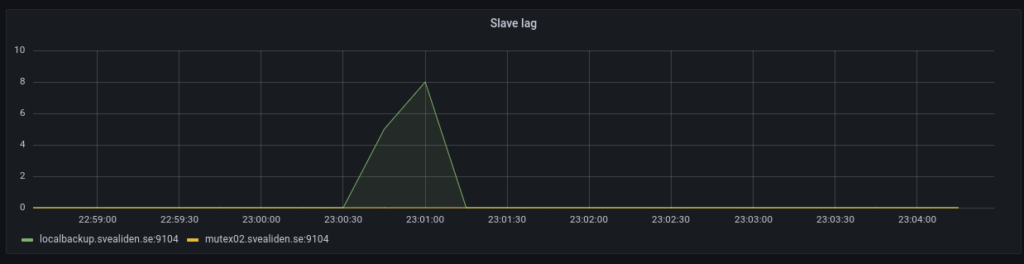

Replication worked just as advertised. Within milliseconds of me deleting a bunch of customers at random on mysqlrecov1, mysqlrecov2 followed suit. As SHOW SLAVE STATUS illustrated at the end, I kept the testscript running for a while longer so more and more gtid_sets were stored on mysqlrecov2 full of legitimate transactions.

The test script started printing a lot more error messages after some random customers got deleted but kept running anyway. So what are we going to do now? Well restoring the last Btrfs snapshot from before the deletion is a good start. We’ll turn off mysqlrecov1 and set mysqlrecov2 so that it can only be reached from the server itself, to keep people from tinkering with the data while we’re working on it.

Seems I chose the right snapshot. We have customer with ids below 5000. Now let’s check in the relay-log from the data_borked directory. We don’t want MySQL to use that directory any more because the data in ib_logdata-files and the sysbench-directory reflects the state where thousands of customers are missing. But the relay-log file is still very valuable. If we run mysqlbinlog –verbose /var/lib/mysql/data_borked/mysqlrecov1-relay-log.00005 we see all the changes made to the database since replication began. This data gets rotated out eventually but here neither time nor space-constraints led any of the changes to be lost before the “mistake” was discovered. We only really need the changes made from the point the latest good snapshot is from to make this work though.

So how does the binlog look?

SET @@SESSION.GTID_NEXT= 'b6efad3f-6c71-11ea-9af3-eaa32fa23308:1586'/*!*/;

# at 472

#200328 22:41:23 server id 1 end_log_pos 337 CRC32 0x633797ec Query thread_id=3 exec_time=0 error_code=0

SET TIMESTAMP=1585435283/*!*/;

SET @@session.pseudo_thread_id=3/*!*/;

SET @@session.foreign_key_checks=1, @@session.sql_auto_is_null=0, @@session.unique_checks=1, @@session.autocommit=1/*!*/;

SET @@session.sql_mode=1436549152/*!*/;

SET @@session.auto_increment_increment=1, @@session.auto_increment_offset=1/*!*/;

/*!\C utf8 *//*!*/;

SET @@session.character_set_client=33,@@session.collation_connection=33,@@session.collation_server=8/*!*/;

SET @@session.lc_time_names=0/*!*/;

SET @@session.collation_database=DEFAULT/*!*/;

BEGIN

/*!*/;

# at 550

#200328 22:41:23 server id 1 end_log_pos 399 CRC32 0x2b1e9803 Table_map: `sysbench`.`Customer` mapped to number 108

# at 612

#200328 22:41:23 server id 1 end_log_pos 480 CRC32 0xc9c162b2 Write_rows: table id 108 flags: STMT_END_F

BINLOG '

k9J/XhMBAAAAPgAAAI8BAAAAAGwAAAAAAAMACHN5c2JlbmNoAAhDdXN0b21lcgAEAw8DDwT/AP8A

CAOYHis=

k9J/Xh4BAAAAUQAAAOABAAAAAGwAAAAAAAEAAgAE//CF4AMAFGNiZnVkbmJmbWxtd2h1YWptbHht

AAAAAA94c3dqZnh1ZmhwZ3NpbWGyYsHJ

'/*!*/;

### INSERT INTO `sysbench`.`Customer`

### SET

### @1=254085

### @2='cbfudnbfmlmwhuajmlxm'

### @3=0

### @4='xswjfxufhpgsima'

# at 693

#200328 22:41:23 server id 1 end_log_pos 511 CRC32 0x0a071603 Xid = 46

COMMIT/*!*/;

# at 724

#200328 22:41:24 server id 1 end_log_pos 576 CRC32 0x05680f0e GTID last_committed=1 sequence_number=2 rbr_only=yes

/*!50718 SET TRANSACTION ISOLATION LEVEL READ COMMITTED*//*!*/;

SET @@SESSION.GTID_NEXT= 'b6efad3f-6c71-11ea-9af3-eaa32fa23308:1587'/*!*/;

# at 789

#200328 22:41:24 server id 1 end_log_pos 654 CRC32 0x79a206c9 Query thread_id=12 exec_time=0 error_code=0

SET TIMESTAMP=1585435284/*!*/;

BEGIN

/*!*/;

# at 867

#200328 22:41:24 server id 1 end_log_pos 716 CRC32 0xa2bb0bb0 Table_map: `sysbench`.`Customer` mapped to number 108

# at 929

#200328 22:41:24 server id 1 end_log_pos 797 CRC32 0xf9f2cb8b Write_rows: table id 108 flags: STMT_END_F

<...>

Okey, slightly obscure. Can we find our DELETE FROM Customer WHERE id < 5000? No. We only find thousands of these:

SET @@SESSION.GTID_NEXT= ‘b6efad3f-6c71-11ea-9af3-eaa32fa23308:26067’/*!*/;

# at 14852899

#200328 23:02:33 server id 1 end_log_pos 14852762 CRC32 0xb87efd39 Query thread_id=4 exec_time=0 error_code=0

SET TIMESTAMP=1585436553/*!*/;

BEGIN

/*!*/;

# at 14852975

#200328 23:02:33 server id 1 end_log_pos 14852825 CRC32 0x6a5f0dd7 Table_map: `sysbench`.`Purchase_list` mapped to number 110

# at 14853038

#200328 23:02:33 server id 1 end_log_pos 14852894 CRC32 0x53b1b9b6 Delete_rows: table id 110 flags: STMT_END_F

BINLOG ‘

idd/XhMBAAAAPwAAANmi4gAAAG4AAAAAAAEACHN5c2JlbmNoAA1QdXJjaGFzZV9saXN0AAQDAwMD

AADXDV9q

idd/XiABAAAARQAAAB6j4gAAAG4AAAAAAAEAAgAE//Dw5AEAlMQAAKJQAAAEAAAA8PLkAQCUxAAA

n3MAAAYAAAC2ubFT

‘/*!*/;

### DELETE FROM `sysbench`.`Purchase_list`

### WHERE

### @1=124144

### @2=50324

### @3=20642

### @4=4

### DELETE FROM `sysbench`.`Purchase_list`

So what is the latest transaction before the first of these thousands of DELETE-operations? Well, I know approximately what time we’re interested in. Then we can just look for DELETE FROM `sysbench`.`Customer`-statements.

So this probably contains the data we’re interested in:

### @2='trirwseikshnmaeuqgzr'

### @3=3522

### @4='peahbpigywdapfo'

# at 14574860

#200328 23:01:06 server id 1 end_log_pos 14574678 CRC32 0xab48065f Xid = 758546

COMMIT/*!*/;

# at 14574891

#200328 23:01:07 server id 1 end_log_pos 14574743 CRC32 0x18eab10c GTID last_committed=24333 sequence_number=24334 rbr_only=yes

/*!50718 SET TRANSACTION ISOLATION LEVEL READ COMMITTED*//*!*/;

SET @@SESSION.GTID_NEXT= 'b6efad3f-6c71-11ea-9af3-eaa32fa23308:25919'/*!*/;

# at 14574956

#200328 23:01:07 server id 1 end_log_pos 14574821 CRC32 0xe10dc87f Query thread_id=16 exec_time=0 error_code=0

SET TIMESTAMP=1585436467/*!*/;

SET @@session.foreign_key_checks=0/*!*/;

BEGIN

/*!*/;

# at 14575034

#200328 23:01:07 server id 1 end_log_pos 14574883 CRC32 0x6e71054b Table_map: `sysbench`.`Customer` mapped to number 108

# at 14575096

#200328 23:01:07 server id 1 end_log_pos 14583060 CRC32 0x65200e62 Delete_rows: table id 108

All right, what is the last state found in the last good snapshot? SHOW SLAVE STATUS says that Read_Master_Log_Pos is 14507560. That’s a reasonable number given that we just found the marker “end_log_pos 14574678” just above where all the bad deletes started. It’s important to know if 14507560 is an end_log_pos or a start position. Searching through the binlog-output reveals that it is an end_pos:

### @3=1682

### @4='vlntfrggrwhykju'

# at 14507742

#200328 23:00:00 server id 1 end_log_pos 14507560 CRC32 0x333e7f0b Xid = 753352

COMMIT/*!*/;

# at 14507773

So we will want to replay from the start position 14507773 that comes directly after the number we found in SHOW SLAVE STATUS. And the final position to include is 14574678.

Okey, so we were able to apply parts of the binlog without running into “DUPLICATE KEY” errors or losing lots of customer data. Shall we try to apply the transactions after the erroneous deletes? Should be as simple as replaying the binlog from position 14766269 to the end.

root@mysqlrecov2:/var/lib/mysql/data_borked# mysqlbinlog --skip-gtids --start-position=14766269 mysqlrecov2-relay-bin.000005 > after_deletes.sql

root@mysqlrecov2:/var/lib/mysql/data_borked# mysql < after_deletes.sql

root@mysqlrecov2:/var/lib/mysql/data_borked#

Seems okey. According the output from the testscript one of the last transactions was a purchase registered to customer 8786 with a cost of 351.

root@mysqlrecov2:/var/lib/mysql/data_borked# mysql

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 9

Server version: 5.7.29-0ubuntu0.18.04.1-log (Ubuntu)

Copyright (c) 2000, 2020, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> USE sysbench;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> SELECT * FROM Purchase WHERE customer_id = 8786;

+-------+-------------+------+--------+

| id | customer_id | cost | weight |

+-------+-------------+------+--------+

| 58333 | 8786 | 351 | 745 |

+-------+-------------+------+--------+

1 row in set (0.00 sec)

mysql>

If this had been a live system I would have been okey with bringing this online again. Since this was a small database a mysqldump and restore back on the master would work. For a bigger system maybe promoting the fixed slave is better and making the previous master into the new slave. Anyway, the testscript agrees that the data is correct:

cjp@kdemain:~/sysbench$ bin/python3.7 ownsysbench.py --hosts 192.168.1.155 --check true

192.168.1.155

Connection to 192.168.1.155: <mysql.connector.connection.MySQLConnection object at 0x7f05b26329d0> by X

self.conn.in_transaction: False

Checking correctness

Numbers check out on a high level. Checking each customer.

cjp@kdemain:~/sysbench$

Oh right, I promised to get back to why we would want to avoid making Btrfs snapshots on the master server. Btrfs and ZFS are not considered ideal for database storage. See for instance the wonderfully titled blog post https://blog.pgaddict.com/posts/friends-dont-let-friends-use-btrfs-for-oltp

It’s a few years old by now but I don’t doubt that Btrfs is still terrible for your database storage if you want to maximize throughput. My real world databases sees maybe 10-20 queries per seconds. My backup software can peak at hundreds per second(I blame the Java ORM-layer, not the me the programmer) for short bursts. Btrfs doesn’t slow that down noticeably. If someone asks me to setup a system that sees sustained loads of 5000 transactions per second I’ll ditch Btrfs in an instant on the master and only use it on the slaves. Because slaves typically don’t do much work. They just stream the results that result from all the work done on the master.

Anyway, I think those are the building blocks for safeguarding MySQL databases. There are some additional tweaks like having one slave intentionally trail behind the master a certain number of minutes to make recovery from bonehead mistakes easier. There are also more synchronous solutions like Galera(I’ve used Percona’s XtraDB variant) to minimize the gap during which a write to a master might be lost because it hasn’t reached any slaves yet.